My stack for programming language creation

The tech I'm using to create the Poly programming language

As I mentioned in the last post, I’ve attempted to make the Poly programming language a few times. The first time, I tried to create Poly in JavaScript. The second time, I used TypeScript. These initial efforts proved to not be tractable, and I attribute that to a mix of factors including my implementation language not providing the right features to make the task scaleable and my software architecture not being robust enough.

To create a programming language and the tools to compile and interpret the language, this is a brief list of what I now consider necessary.

Implementation language needs:

a rock-solid static type system (types are checked at compile time, not run time)

exhaustive pattern matching (types are matchable in patterns; see below)

optional: compilation to JavaScript (to develop tooling that runs on the web)

Software architecture needs:

copious amounts of unit tests to ensure new language features work and don’t regress with later changes

optional: a visual system to iteratively see how code is parsed and lay down unit tests based on observed behavior

Implementation language

Once the programming language toolchain is created, future versions of the tooling can be written with the new programming language itself! But first we have to construct the language with a different implementation language. Using the requirements outlined above, I have chosen ReasonML as my original implementation language for Poly and its toolchain.

ReasonML is a variant of OCaml, a proven language with decades of usage originally intended for interactive proofs. ReasonML uses a toolchain called Rescript (formerly BuckleScript) to compile the resulting code down to JavaScript. ReasonML also has tight community integrations with the popular React framework, allowing for easier creation of visual systems.

In addition to strong static typing, ReasonML allows you to specify detailed type schemas for your variables and then specify behavior based on matched patterns. The compiler will chide you if you duplicate patterns or don’t exhaustively provide patterns to satisfy the whole type schema. For instance, if your programming language supports simple arithmetic, you might define a mathematical expression in your language as follows:

type expression =

| PLUS(expression, expression)

| MINUS(expression, expression)

| MULTIPLY(expression, expression)

| DIVIDE(expression, expression)

| NUMBER(float);This is saying that an expression can either be a basic operator (plus, minus, multiply, or divide) which takes two expressions (a left side and a right side) or a number. You might express “2 + 3 * 5” as follows:

PLUS(NUMBER(2), MULTIPLY(NUMBER(3), NUMBER(5)))To evaluate the expression, you could provide a method that iterates over all possible types using pattern matching:

let rec evaluate = (e: expression): float => {

switch (e) {

| PLUS(e1, e2) => evaluate(e1) +. evaluate(e2)

| MINUS(e1, e2) => evaluate(e1) -. evaluate(e2)

| MULTIPLY(e1, e2) => evaluate(e1) *. evaluate(e2)

| NUMBER(num) => num

}

}This recursive function takes in an expression and returns a single float, or decimal number, as a result. It takes the given expression and iterates through each possible type it could have, evaluating it by calling itself on the operands and applying the correct mathematical operator. But wait… this compiler is throwing a warning!

You forgot to handle a possible case here, for example:

DIVIDE (_, _)We forgot to handle “DIVIDE” in our evaluate function above. ReasonML leverages its understanding of our type schema to provide hints about where we are lacking functionality. This is an example of the kind of pattern matching that I find crucial for programming language creation: the compiler ensures we have not forgotten to handle anything. Other typed languages in the JavaScript ecosystem like TypeScript lack this level of sophistication. Creating compilers that convert source code to schemas representing the program is much easier with pattern matching. While I don’t envision the syntax being identical, I aim for Poly to have similar pattern matching mechanisms.

Honorable mentions

Although I ultimately chose ReasonML, I did not take the decision lightly. There are other languages that would’ve likely been just as effective, but I mainly chose ReasonML due to its backing by an established programming language (OCaml) and its JavaScript-esque syntax with which I’m most familiar. Here’s a non-exhaustive list of other languages that I had considered:

Elm: a language that resembles Haskell that compiles to JavaScript. I’ve been following this language closely since it was released in 2012 and has well-designed features like clear error messages.

PureScript: another language that also resembles Haskell that compiles to JavaScript.

Rust: this language does not compile to JavaScript directly, but is an exciting language for backend development. I’ve considered Rust for making an extremely fast compiler and even taken a stab at implementing something briefly. Ultimately, it’s a bit too low-level for me to be productive, and not compiling to JavaScript directly is a downside. Maybe one day Poly can have a compiler target for fast, native machine code.

Software architecture

It’s still early in the development process, but the architecture I’m considering has several stages:

Lexing: the process of going from individual characters to concrete tokens. If English were the language we were creating, tokens would be words. Example tokens for the Poly language I’m creating include “EQUALS,” “RIGHT_PAREN,” and “LET.”

Parsing: the process of transforming tokens into a tree-like representation of the program called a syntax tree.

Interpreting/compiling: This is the stage where you do something with the syntax tree. Interpreting means you run the program in a managed environment based on the tree’s instructions (that’s what the “evaluate” method above did). Compiling is more complex and involves potentially optimizing the syntax tree to be more concise/efficient and then translating it to another language. Compiling traditionally means translating source code to machine code, but with a language like Poly, compiling would entail outputting JavaScript, HTML, and CSS to make the application function on the web without any dependencies.

While I’m still working out the details on the later stages, each concrete stage will be represented by a folder in my source code that contains supporting modules. I’m working primarily on the lexer at the moment and have a folder called “lexer” with subfiles like:

Token.re: specifies all possible tokens as types

Context.re: defines a lexing context object, which has all data needed to handle the steps of lexing at any given moment, and supporting methods. The context has information on the raw source code, the current source position, tokens and errors encountered thus far, and more.

Consume.re: contains methods for consuming chunks of the source code. For instance, a “whitespace” consume method advances the position of a lexer context object past whitespace like spaces, tabs, and newlines. There are additional methods for parsing variable names, numbers, and more.

Lexer.re: the primary module that handles lexing. There are functions for regular lexing and text lexing (i.e. lexing text inside quotes). These can be seen as lexing modes. The default mode is regular lexing, but when quotes are encountered, the mode switches to text lexing. If a string interpolation expression is encountered in text lexing mode, the mode switches to regular lexing again, with an argument indicating we’re in a nested mode.

Note that there are established tools like Lex and Yacc (and even versions tuned for OCaml that would work in ReasonML) for creating lexers and parsers. I have tried to wrap my head around these tools for a long time. Ultimately, I am after a level of control of the parsing process that I feel automated tools don’t offer me. If I want to extend the error-reporting system later, for instance, that seems a lot harder working with generated files from tools. Plus, it’s fun to learn and build a system from scratch.

A visual, iterative system for language creation

While I am primarily creating a text-based programming language, I have given a lot of thought to the design of programming language creation. Ideally there would be established tools that provide visual feedback for drafting programming languages. I have not been able to find something that I like, so like many programming language creators before me, I am rolling my own system.

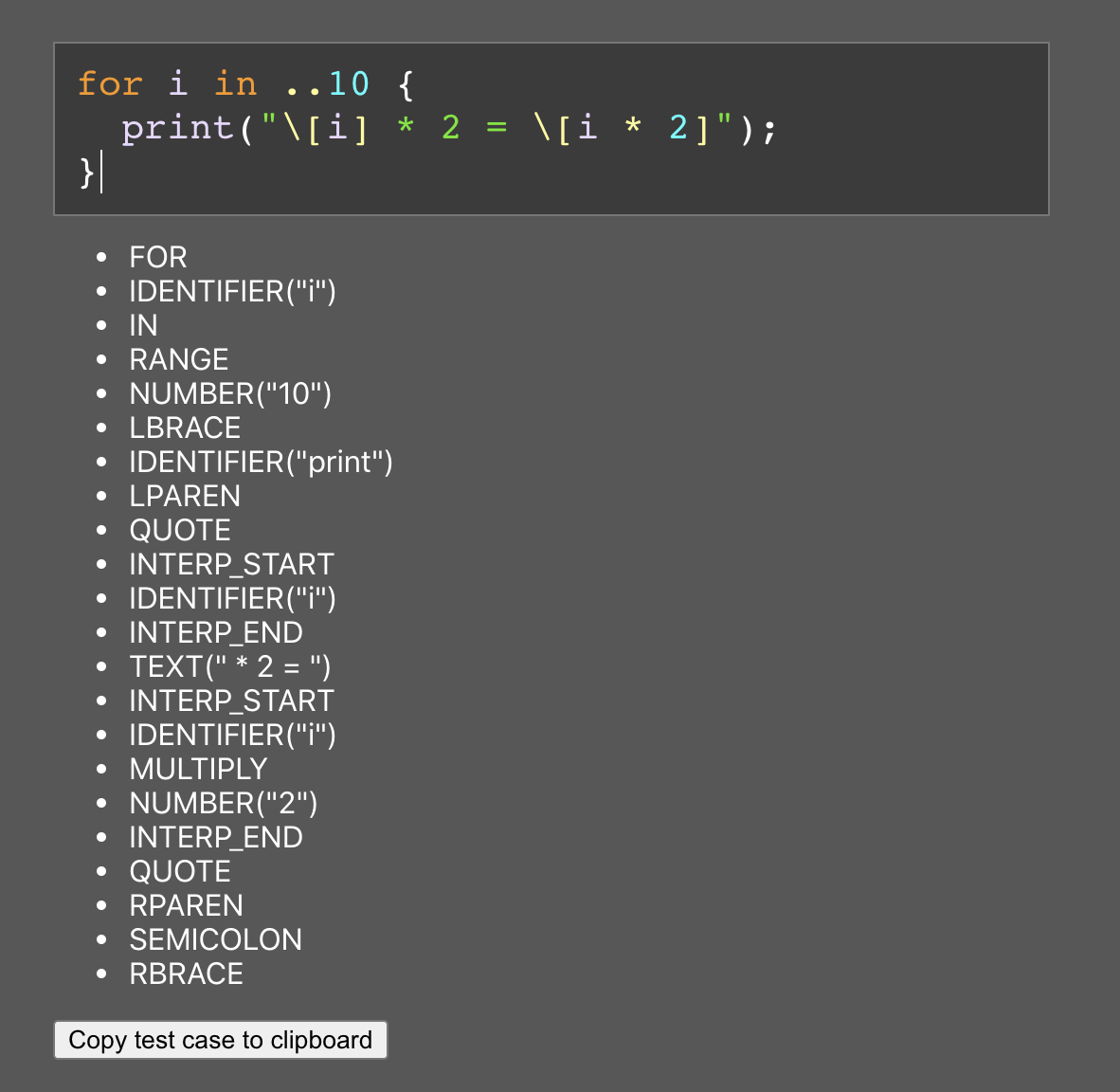

This interface is crude but functional enough to serve my needs well. Right now, I am only focusing on lexing. There’s a free-form text area input at the top. As you type, the source code is processed, and the tokens are outputted below. The source code is syntax highlighted based on the type of token at the given position.

Crucially, there’s a button to copy a test case to the clipboard. I have a unit testing system built on top of the Jest framework. By hitting the copy test case button above, I can then paste the test case in the lexing unit test file. This saves valuable time, as it can be tedious to manually type out each test case, especially for rapidly adding lots of tests. For instance, the above test case looks like the following when pasted:

expect(lex("for i in ..10 {\n print(\"\\[i] * 2 = \\[i * 2]\");\n}")) == [

Token.FOR,

Token.IDENTIFIER("i"),

Token.IN,

Token.RANGE,

Token.NUMBER("10"),

Token.LBRACE,

Token.IDENTIFIER("print"),

Token.LPAREN,

Token.QUOTE,

Token.INTERP_START,

Token.IDENTIFIER("i"),

Token.INTERP_END,

Token.TEXT(" * 2 = "),

Token.INTERP_START,

Token.IDENTIFIER("i"),

Token.MULTIPLY,

Token.NUMBER("2"),

Token.INTERP_END,

Token.QUOTE,

Token.RPAREN,

Token.SEMICOLON,

Token.RBRACE,

]Overall, this system enforces that the lexing result of a given source code input always results in a specified token output. Jest allows me to run unit tests in watch mode, which means every time I modify or add functionality, all my unit tests run again. If anything breaks that previously worked, I’ll have instant feedback. By carefully and meticulously laying out a large amount of unit tests, I can have high confidence that my lexing is working as intended. (And if a new feature doesn’t work, I can just implement it and add a test case to enforce the new behavior).

Summary

I’m using ReasonML to create the Poly programming language because of its strong static type safety and pattern matching. I’m leveraging the React framework to create a minimal visual system to test out lexing/parsing functionality and automate unit test creation using Jest.